Machine learning has emerged as a powerful tool in the era of artificial intelligence, offering valuable insights and predictions from large and complex data sets. It’s generally categorized into three broad types: supervised learning, unsupervised learning, and reinforcement learning. An integral part of deep learning, a subset of machine learning, is the concept of neural networks.

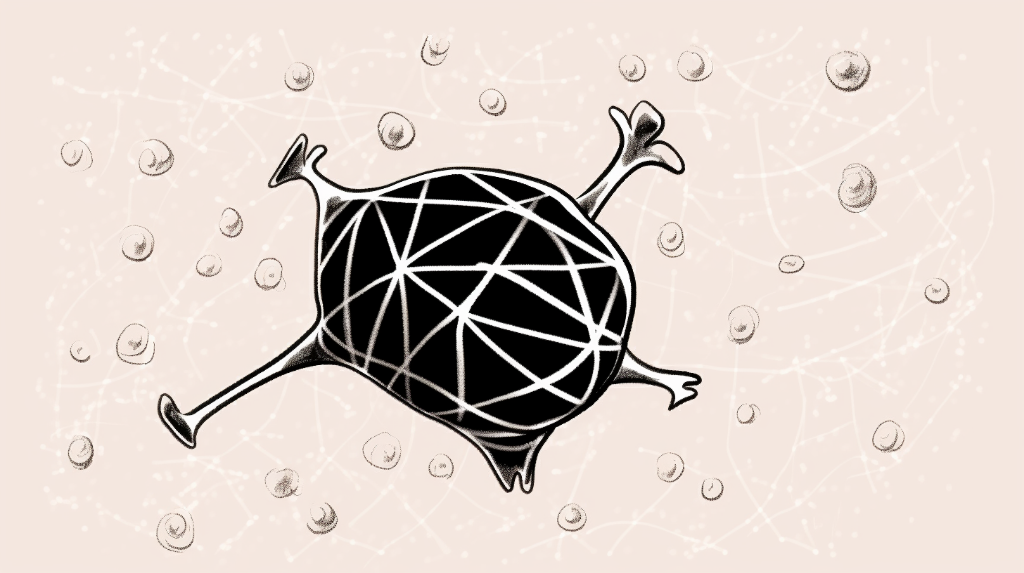

Neural networks, particularly artificial ones, are computational models inspired by the human brain’s structure. They are designed to recognize patterns and make predictions by mimicking the way neurons operate within the brain. One specific type of artificial neural network that has gained considerable attention is the Recurrent Neural Network (RNN).

RNNs are a unique class of artificial neural networks designed to recognize patterns in sequences of data, such as text, genomes, handwriting, or spoken word. They are particularly useful for tasks where context matters, as they have a form of ‘memory’ that allows them to take into account previous inputs while processing new ones. Let’s dive into the fascinating world of RNNs.

The Architecture of Recurrent Neural Networks

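Like other neural networks, Recurrent Neural Networks are built in a layered structure, with each layer consisting of several interconnected nodes, also known as neurons. In a standard feedforward layer, each node is connected to every node in the subsequent layer, so information flows in one direction only. An RNN adds recurrent connections on top of this: the output of the hidden layer at one time step is fed back in as an input at the next time step, so information also flows forward through time. This feedback loop is a crucial aspect of the learning process in RNNs.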

The architecture of RNNs is designed in such a way that it can use its internal state (memory) to process sequences of inputs. This feature sets them apart from other neural network architectures and enables them to deal with varying input lengths and retain information over time.

The Unique Feature of RNNs

What truly sets RNNs apart from other neural networks is their ‘memory’. Unlike feedforward neural networks, which process inputs independently, RNNs take into account the ‘history’ of previous inputs in their computations. But how do they do this?

RNNs achieve this by using their internal state to process sequences of inputs. This means that the output for a given input not only depends on that input but also on a series of previous inputs. The network’s ability to remember past inputs while processing new ones gives it a form of ‘memory’. This makes RNNs particularly useful for tasks that involve sequential inputs with dependencies between different parts of the sequence.
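To make the idea of an internal state concrete, here is a minimal sketch of a simple (Elman-style) recurrent step in plain Python. The update rule h_t = tanh(W_xh·x_t + W_hh·h_{t-1} + b_h) is the usual textbook form; the function names, the toy weights, and the two example sequences are assumptions made up for illustration.

```python
import math

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One recurrent step: h_t = tanh(W_xh @ x_t + W_hh @ h_prev + b_h)."""
    h_t = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x_t[j] for j in range(len(x_t)))
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(total))
    return h_t

def rnn_forward(xs, h0, W_xh, W_hh, b_h):
    """Run the step over a whole sequence, carrying the hidden state along."""
    h = h0
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

# Hypothetical toy weights: 1 input feature, 2 hidden units.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]

# Two sequences that differ only in their FIRST input.
seq_a = [[1.0], [0.0]]
seq_b = [[-1.0], [0.0]]

final_a = rnn_forward(seq_a, [0.0, 0.0], W_xh, W_hh, b_h)[-1]
final_b = rnn_forward(seq_b, [0.0, 0.0], W_xh, W_hh, b_h)[-1]
print(final_a, final_b)
```

Both sequences end with the same input, yet the final hidden states differ, because the first input is still reflected in the state that was carried forward.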

Breaking Down the Working of RNNs

Have you ever wondered how Recurrent Neural Networks (RNNs) function? Well, the process is quite fascinating. It all starts with the two phases of machine learning: the training phase and the testing phase. During the training phase, the RNN is provided with inputs and expected outputs (labels), and its weights are adjusted to minimize the difference between the predicted and expected outputs. During the testing phase, the weights are held fixed and the network is evaluated on data it did not see during training. The training phase is where the learning actually happens.

The Importance of Weights in RNNs

What exactly are these weights we just mentioned? Simply put, weights in an RNN determine the degree of influence a particular input (or neuron) can have on the output. These weights play a significant role in the network’s ability to accurately process and predict data. Without them, the RNN would not be able to learn and adapt to the input it receives.

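But how are these weights adjusted? The answer is a process called backpropagation, which in RNNs takes the form of backpropagation through time (BPTT). The network is unrolled across the time steps of the sequence, the error at the output is propagated backward through those steps to work out how much each weight contributed to it, and each weight is then nudged in the direction that reduces the error. So, in essence, the network learns from its mistakes!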
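The weight-adjustment loop can be sketched on the smallest possible case. The example below assumes a scalar RNN with one recurrent weight `w` and one input weight `u`, a squared-error loss on the final state only, and a made-up toy sequence and learning rate. It is an illustration of propagating the error backward through the time steps, not a production training routine.

```python
import math

def forward(w, u, xs):
    """Scalar RNN: h_t = tanh(w * h_{t-1} + u * x_t), starting from h_0 = 0."""
    hs = [0.0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs

def loss_and_grads(w, u, xs, y):
    """Squared error on the last state, with gradients computed backward in time."""
    hs = forward(w, u, xs)
    loss = 0.5 * (hs[-1] - y) ** 2
    dw = 0.0
    du = 0.0
    dh = hs[-1] - y                      # dLoss/dh_T
    for t in range(len(xs), 0, -1):      # walk backward through the time steps
        da = dh * (1.0 - hs[t] ** 2)     # through tanh: d tanh(a)/da = 1 - tanh(a)^2
        dw += da * hs[t - 1]             # the same w is reused at every step
        du += da * xs[t - 1]
        dh = da * w                      # pass the error back to h_{t-1}
    return loss, dw, du

# Hypothetical toy data and hyperparameters.
xs, y = [1.0, 0.5, -0.5], 0.3
w, u = 0.5, 0.5
learning_rate = 0.1

initial_loss, _, _ = loss_and_grads(w, u, xs, y)
for _ in range(200):
    _, dw, du = loss_and_grads(w, u, xs, y)
    w -= learning_rate * dw              # gradient descent update
    u -= learning_rate * du
final_loss, _, _ = loss_and_grads(w, u, xs, y)
print(initial_loss, final_loss)
```

Because the same weights are reused at every time step, their gradients are accumulated across all steps before a single update is applied.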

The Role of Activation Functions

Another essential component of RNNs is the activation function. These functions introduce non-linearity into the network. But why is this important? It allows the network to learn more complex patterns in the data. Without activation functions, the network would only be able to process linear relationships, limiting its usefulness.

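Common activation functions include the sigmoid, tanh, and ReLU (Rectified Linear Unit) functions. Sigmoid squashes its input into the range 0 to 1, tanh squashes it into the range -1 to 1, and ReLU passes positive inputs through unchanged while mapping negative inputs to zero. In a simple RNN, tanh is the usual choice for the hidden state, while the gates in LSTM and GRU networks rely on the sigmoid.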
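For reference, the three functions named above can be written in a few lines of plain Python. This is only a sketch for scalar inputs; the sample values are arbitrary.

```python
import math

def sigmoid(z):
    """Squashes z into (0, 1); written to avoid overflow for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    """Squashes z into (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Keeps positive values unchanged and maps negative values to zero."""
    return max(0.0, z)

for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), tanh(z), relu(z))
```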

Applications of RNNs

Now that we’ve learned how RNNs work, let’s look at some of their applications. You might be surprised to learn that RNNs are all around us!

RNNs are widely used in natural language processing (NLP). This includes applications like language translation, sentiment analysis, and text generation. They are also used in speech recognition systems, such as the voice assistants on our smartphones.

Another interesting application of RNNs is in stock price prediction. By analyzing past trends, RNNs can make predictions about future stock prices. However, it’s important to remember that stock prices are noisy and driven by many factors that past prices alone do not capture, so these forecasts are still just predictions. So, always do your research before making any investment decisions!

The Challenges of Training RNNs

Training Recurrent Neural Networks (RNNs) is not always a walk in the park. There are quite a few challenges that data scientists and machine learning engineers often face. Now, you might wonder, what are these challenges? Well, let’s explore them.

The two main issues that often arise are the problems of ‘vanishing gradients’ and ‘exploding gradients’. These problems can significantly hinder the learning process of an RNN. But what do these terms mean?

Vanishing gradients: This problem occurs when the gradient of the loss function shrinks toward zero as it is propagated backward through many time steps. As a result, the weights receive only tiny updates from the early parts of the sequence, leading to a long, drawn-out training process. This makes it difficult for the network to learn and capture long-range dependencies in the data.

Exploding gradients: On the flip side, the exploding gradients problem arises when the gradient of the loss function grows very large as it is propagated backward through time. This results in very large updates to the weights during the training process. Consequently, the model’s performance can become erratic and unstable, making it hard to achieve a good fit to the data.

| Challenge | Description |
|---|---|
| Vanishing Gradients | The gradient of the loss function approaches zero, leading to slow weight updates and prolonged training time. |
| Exploding Gradients | The gradient of the loss function becomes too large, resulting in large weight updates and unstable model performance. |
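A rough way to see where both problems come from is to treat the gradient as being multiplied by roughly the same factor at every time step. The sketch below assumes a deliberately simplified scalar, linear recurrence h_t = w * h_{t-1}, so the gradient of h_T with respect to h_0 is just w multiplied by itself T times. The factors 0.5 and 1.5 and the 50 steps are arbitrary illustrative choices.

```python
def gradient_through_time(w, steps):
    """Gradient of h_T with respect to h_0 for the linear recurrence h_t = w * h_{t-1}."""
    grad = 1.0
    for _ in range(steps):
        grad *= w  # one multiplication per time step
    return grad

vanishing = gradient_through_time(0.5, 50)  # roughly 8.9e-16
exploding = gradient_through_time(1.5, 50)  # roughly 6.4e8
print(vanishing, exploding)
```

A factor only slightly below or above 1 is enough to push the gradient toward zero or toward very large values once the sequence gets long.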

Solutions to Overcome the Challenges

Now that we’ve identified the challenges, it’s time to look at some solutions. Thankfully, the field of machine learning has come up with several techniques to address these issues. These include gradient clipping, non-saturating activation functions, and advanced RNN architectures like LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit).

Gradient Clipping: This is a technique used to prevent exploding gradients. It involves setting a threshold value; if the gradient (or, more commonly, its norm) exceeds this value, the gradient is scaled back or cut off so that it stays at the threshold. This effectively limits the size of the gradient, thereby preventing any single weight update from becoming too large.
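One common variant rescales the whole gradient vector whenever its norm exceeds the threshold, which keeps the direction of the update and only shortens its length. The sketch below assumes the gradient is a flat list of floats; the function name `clip_by_norm` and the example numbers are made up for illustration.

```python
import math

def clip_by_norm(grads, max_norm):
    """Rescale grads so that their L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)          # small enough: leave unchanged
    scale = max_norm / norm
    return [g * scale for g in grads]

clipped = clip_by_norm([3.0, 4.0], 1.0)    # norm 5.0 is scaled down to about [0.6, 0.8]
untouched = clip_by_norm([0.3, 0.4], 1.0)  # norm 0.5 is already below the threshold
print(clipped, untouched)
```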

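Non-saturating Activation Functions: These are activation functions, such as ReLU, that do not squash large inputs into a narrow, flat range. Saturating functions like sigmoid and tanh have derivatives close to zero for large positive or negative inputs, and multiplying many such small derivatives together shrinks the gradient. Non-saturating functions help mitigate the vanishing gradients problem because their derivatives do not shrink in this way.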
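The difference shows up in the derivatives. The sketch below compares the derivative of the sigmoid with that of ReLU and then multiplies each over 20 steps, as a rough stand-in for what happens when a gradient passes through many activations. The choice of 20 steps and the sample inputs are arbitrary.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    """Derivative of the sigmoid; never larger than 0.25."""
    s = sigmoid(z)
    return s * (1.0 - s)

def relu_grad(z):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0

print(sigmoid_grad(0.0))   # 0.25, the best case for the sigmoid
print(sigmoid_grad(10.0))  # tiny: the sigmoid has saturated
print(relu_grad(10.0))     # 1.0: no saturation for positive inputs

# Product of 20 derivatives, each taken at its most favourable point.
sigmoid_chain = sigmoid_grad(0.0) ** 20  # 0.25 ** 20, about 9.1e-13
relu_chain = relu_grad(1.0) ** 20        # stays at 1.0
print(sigmoid_chain, relu_chain)
```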

LSTM and GRU: LSTM and GRU are variants of RNNs that are specifically designed to combat the vanishing gradients problem. Both introduce gates, which are learned switches that control what information is kept, updated, or discarded at each time step; the LSTM additionally maintains a separate memory cell alongside its hidden state. These features allow them to remember long-term dependencies, so they can learn from data where the important features are separated by many time steps.
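As a rough illustration of how gates protect a memory, here is a sketch of a single LSTM step with scalar state. The gate equations follow the standard LSTM formulation; the `params` layout and the hand-picked values (a forget gate biased toward 1 and an input gate biased toward 0) are assumptions chosen to make the effect visible, not learned weights.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One step of a scalar LSTM cell.

    params maps each gate name to a (w_x, w_h, b) tuple; this layout is a
    hypothetical one used only for this sketch.
    """
    def pre(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    i = sigmoid(pre("input"))         # how much new information to write
    o = sigmoid(pre("output"))        # how much of the cell to expose
    g = math.tanh(pre("candidate"))   # candidate value to write
    c = f * c_prev + i * g            # updated memory cell
    h = o * math.tanh(c)              # updated hidden state
    return h, c

# Hand-picked illustrative parameters: forget gate near 1, input gate near 0.
params = {
    "forget": (0.0, 0.0, 6.0),
    "input": (0.0, 0.0, -6.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}

h, c = 0.0, 1.0                       # start with a value stored in the cell
for x in [0.5] * 20:
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

With the forget gate close to 1 and the input gate close to 0, the cell state stays close to its starting value even after 20 new inputs, which is the kind of long-term retention a simple RNN struggles with.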

The Future of RNNs

The future of Recurrent Neural Networks (RNNs) certainly looks promising. As the field of artificial intelligence continues to evolve, researchers are tirelessly working on improving the performance and reliability of these networks. The advancements are not just focused on mitigating the existing challenges such as vanishing and exploding gradients, but also on enhancing the overall computational efficiency and learning capability of these networks.

Furthermore, the application of RNNs is expanding beyond the realms of natural language processing and speech recognition. With the advent of more powerful computational resources and sophisticated learning algorithms, RNNs are gradually being employed in complex tasks such as real-time translation and autonomous driving. Can you imagine the impact this could have on our lives?

However, it is important to remember that while RNNs offer a lot of potential, they are just one piece of the broader AI puzzle. They need to be complemented with other machine learning techniques and models to truly realize their full potential.

Final Thoughts on RNNs

As we conclude this blog, let’s revisit some key points. Recurrent Neural Networks, with their unique ‘memory’ feature, have been instrumental in revolutionizing the field of deep learning. They have given machines the ability to understand patterns in sequences of data, thereby opening up a world of possibilities in areas like natural language processing, speech recognition, and even stock price prediction.

However, as with any technology, RNNs come with their own set of challenges. The issues of vanishing and exploding gradients have been a bottleneck in optimizing the performance of these networks. Despite these challenges, solutions such as LSTM and GRU have shown promise in overcoming these hurdles.

The journey of learning and experimenting with RNNs is a fascinating one. It’s a journey that involves not just understanding complex algorithms, but also appreciating the sheer power of what these algorithms can achieve. So, are you ready to be part of this exciting journey?

Additional Resources for Learning

- Books:
  - Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville
  - Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow by Aurélien Géron
- Online Courses:
  - Deep Learning Specialization by Andrew Ng on Coursera
  - Introduction to Deep Learning by Higher School of Economics on Coursera
- Articles:
  - “Understanding LSTM Networks” by Christopher Olah
  - “The Unreasonable Effectiveness of Recurrent Neural Networks” by Andrej Karpathy